Anti-reverse engineering – techniques that make it difficult to reverse-engineer malware (malicious software).

Reverse engineering refers to methods of analysing a compiled program without access to its source code. In this article I would like to describe methods which are used by creators of malicious software to hinder the analysis of viruses and other malicious software (known as malware), and I will explain how antivirus companies and antivirus software deal with this.

In order to make analysis difficult for antivirus companies, first you need to know how antivirus companies analyse malware “in the lab”. So, what are the most common approaches that antivirus companies use?

- Testing malware in a virtual environment

- Testing malware in sandboxes and emulators

- Monitoring changes that are made to the system

- Static analysis

- Dynamic analysis

- Generating signatures of the malware

Meanwhile, antivirus software (running on a user's computer) can perform the following checks and use them to help assess the actual intentions of a given malicious program:

- Checksums of files and fragments (e.g. MD5, SHA1, SHA2, CRC32)

- Unusual file structure

- Unusual values in the files

- Signatures of file fragments (heuristic methods)

- String constants

- Application behaviour, known as behavioural analysis (monitoring access to the system files, the Windows Registry, etc.)

- Function calls (which functions are called, what arguments are supplied, the order of calls)
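To give a feel for the first item in the list above, here is a minimal sketch of a CRC32 checksum in portable C. It is not taken from any particular antivirus engine. The names `crc32_buf` and `matches_known_checksum` are my own, and a real product would use a table-driven implementation and stronger hashes as well.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Bitwise CRC-32 using the reflected polynomial 0xEDB88320
   (the same variant that is used by ZIP and PNG). */
uint32_t crc32_buf(const void *data, size_t len)
{
    const uint8_t *p = (const uint8_t *)data;
    uint32_t crc = 0xFFFFFFFFu;

    assert(data != NULL || len == 0);

    for (size_t i = 0; i < len; i++) {
        crc ^= p[i];
        for (int bit = 0; bit < 8; bit++) {
            /* mask is all ones when the lowest bit is set, zero otherwise */
            uint32_t mask = 0u - (crc & 1u);
            crc = (crc >> 1) ^ (0xEDB88320u & mask);
        }
    }
    return ~crc;
}

/* Hypothetical helper: does a file fragment match a known-bad checksum? */
int matches_known_checksum(const void *data, size_t len, uint32_t known)
{
    return crc32_buf(data, len) == known;
}
```

A checksum like this changes completely when even a single byte of the fragment changes, which is exactly why the techniques described later in this article are so effective against checksum-based detection.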

At each of these steps, both during analysis “in the lab” as well as by antivirus software, it is possible to encounter obstacles specially designed to prevent or slow down the analysis.

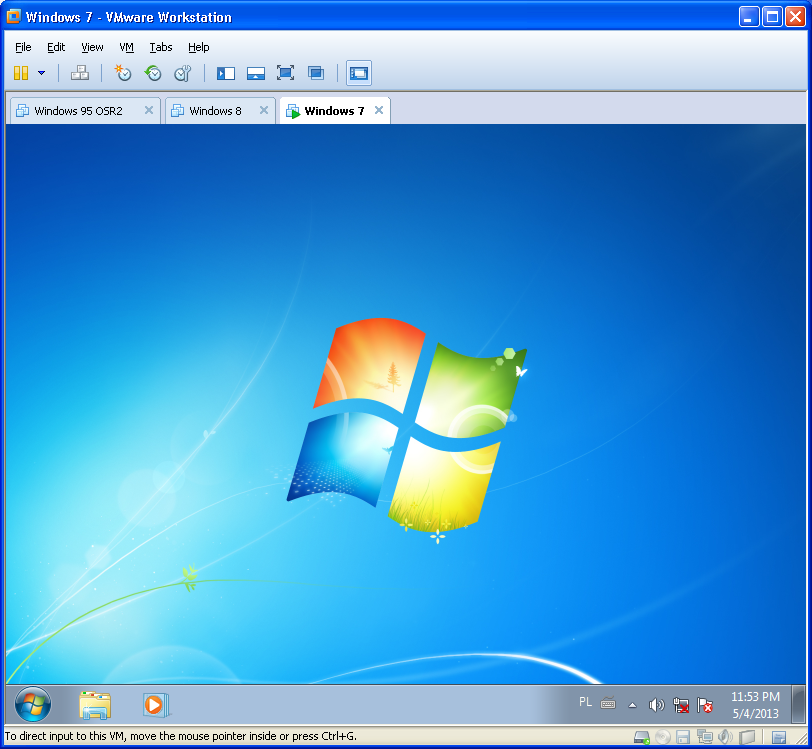

Detection of virtual machines

In the vast majority of cases, malicious software is tested in virtual machines, for instance VMware, VirtualBox, Virtual PC, Parallels, etc. This protects analysts from infecting their own machines, which does happen sometimes! In 2011, due to an employee's mistake at the antivirus company ESET, the well-known (and expensive) analysis software package IDA was stolen along with the HexRays decompiler.

Normally in virus labs, malicious software is stored in folders without execute permissions, specifically to prevent accidental execution of infected or malicious files. The use of virtual machines also allows additional tools to be employed, for example it is easy to compare the system image before infection with the system image after infection, so that even tiny changes to system files, the Windows Registry, and other system components can be quickly uncovered.

Running malware on virtual machines also allows accurate tracking of network traffic (by employing network sniffers like Wireshark), which for example may reveal that the malware communicates with servers controlling a botnet to which the malware belongs.

For these reasons, malware authors often wish to prevent their malware from activating if run on a virtual machine, but to do that, the malware needs to detect that it is being run in a virtual environment. Virtual machines are not a perfect representation of a real computer, and so have characteristics that can “give them away”, for example:

- Virtual hardware devices with specific names that only appear in virtual machines

- Incomplete or limited emulation of features of real machines (such as IDT and GDT tables)

- Obscure or undocumented APIs to communicate with the host layer (e.g. Virtual PC uses the assembly instruction `cmpxchg8b eax` with magic values in the processor registers to determine its presence)

- Additional utilities, such as VMware Tools, whose presence can be given away by things like the names of particular system objects (the names of mutexes, events, class names, window names, etc.)
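The first item in the list above (device names that only appear in virtual machines) boils down to a string comparison. Below is a minimal sketch in portable C, with the Windows-specific part (reading the device name) deliberately left out. The function names and the sample device strings are assumptions made for illustration. Note that the comparison ignores case, because real device names mix upper and lower case (e.g. "VMware" and "VBOX").

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

/* Case-insensitive substring search: returns 1 if needle occurs in haystack. */
static int contains_nocase(const char *haystack, const char *needle)
{
    for (; *haystack; haystack++) {
        const char *h = haystack;
        const char *n = needle;

        while (*h && *n &&
               tolower((unsigned char)*h) == tolower((unsigned char)*n)) {
            h++;
            n++;
        }
        if (*n == '\0')
            return 1;
    }
    return *needle == '\0';
}

/* Returns 1 if the device name contains a marker typical of virtual hardware. */
int looks_like_virtual_device(const char *device_name)
{
    static const char *const markers[] = { "vmware", "vbox", "virtual" };

    assert(device_name != NULL);

    for (size_t i = 0; i < sizeof(markers) / sizeof(markers[0]); i++) {
        if (contains_nocase(device_name, markers[i]))
            return 1;
    }
    return 0;
}
```

The same list of markers is useful from the analyst's side: these are the names that should be changed when preparing a virtual machine for malware analysis.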

Listing 1. Identifying a VMware environment using an API interface.

BOOL IsVMware()

{

BOOL bDetected = FALSE;

__try

{

// check for the presence of VMware

__asm

{

mov ecx,0Ah

mov eax,'VMXh'

mov dx,'VX'

in eax,dx

cmp ebx,'VMXh'

sete al

movzx eax,al

mov bDetected,eax

}

}

__except(EXCEPTION_EXECUTE_HANDLER)

{

// if an exception occurs

// return FALSE

return FALSE;

}

return bDetected;

}

Listing 2. Identifying a virtual environment by entries in the Windows Registry.

BOOL IsVM()

{

HKEY hKey;

int i;

char szBuffer[64] = { 0 };

// plain substrings: strstr() does not interpret '*' as a wildcard
char *szProducts[] = { "VMWARE", "VBOX", "VIRTUAL" };

DWORD dwSize = sizeof(szBuffer) - 1;

if (RegOpenKeyEx(HKEY_LOCAL_MACHINE, "SYSTEM\\ControlSet001\\Services\\Disk\\Enum", 0, KEY_READ, &hKey) == ERROR_SUCCESS)

{

if (RegQueryValueEx(hKey, "0", NULL, NULL, (unsigned char *)szBuffer, &dwSize ) == ERROR_SUCCESS)

{

// normalise case, because the value may contain e.g. "VMware"
CharUpperA(szBuffer);

for (i = 0; i < _countof(szProducts); i++)

{

if (strstr(szBuffer, szProducts[i]))

{

RegCloseKey(hKey);

return TRUE;

}

}

}

RegCloseKey(hKey);

}

return FALSE;

}

Listing 3. Detecting Virtual PC.

sub eax,eax ; prepare magic values

sub edx,edx ; in registers

sub ebx,ebx

sub ecx,ecx

db 0Fh, 0C7h, 0C8h ; 'cmpxchg8b eax' instruction

; if Virtual PC is present, after performing cmpxchg8b eax

; register EAX = 1 and there will be no exception

; otherwise an exception will occur

Listing 4. Detecting execution in VirtualBox.

BOOL IsVirtualBox()

{

BOOL bDetected = FALSE;

// is the VirtualBox helper library

// installed in the system?

if (LoadLibrary("VBoxHook.dll") != NULL)

{

bDetected = TRUE;

}

// is the VirtualBox support device

// installed in the system?

if (CreateFile("\\\\.\\VBoxMiniRdrDN", GENERIC_READ, \

FILE_SHARE_READ, NULL, OPEN_EXISTING, \

FILE_ATTRIBUTE_NORMAL, NULL) \

!= INVALID_HANDLE_VALUE)

{

bDetected = TRUE;

}

return bDetected;

}

Ironically, the mere presence of functions used to detect a virtual environment can alert an analyst that they may be working with a malicious program which is trying to avoid being analysed within a safe environment. Still, such functions are also commonly used by copy protection and content protection software. For example, the player used in the popular multimedia platform Ipla.tv refuses to play live programs if it detects that it is running in a virtual environment. For interest's sake, I'll mention that the library used by this player is easily accessed, and it's reasonably easy to disable the function responsible for detecting a virtual environment with the help of a debugger.

Some software publishers also block the ability to launch their applications in a virtual machine for a very practical reason – piracy. They “lock” the software to the hardware it is installed on (e.g. by generating the licence key using the machine's hardware profile). When software is installed in a virtual machine, it is easy to copy the virtual machine image to as many physical computers as desired, and all of these virtual machines will have an identical hardware profile, thus allowing the software to run on multiple computers.
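The hardware “lock” described above can be illustrated with a few lines of C. This is only a sketch under my own assumptions: the profile string format, the function names and the use of the FNV-1a hash are all made up for the example, and commercial systems rely on proper cryptography rather than a 32-bit hash.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* 32-bit FNV-1a hash of a NUL-terminated string. */
uint32_t fnv1a32(const char *s)
{
    uint32_t h = 2166136261u;   /* FNV offset basis */

    assert(s != NULL);

    while (*s) {
        h ^= (uint8_t)*s++;
        h *= 16777619u;         /* FNV prime */
    }
    return h;
}

/* Hypothetical scheme: the key is the hash of the hardware profile
   combined with a secret value known only to the publisher. */
uint32_t licence_key_for(const char *hardware_profile, uint32_t product_secret)
{
    return fnv1a32(hardware_profile) ^ product_secret;
}

/* Returns 1 if the key was generated for this hardware profile. */
int licence_is_valid(const char *hardware_profile,
                     uint32_t product_secret, uint32_t key)
{
    return licence_key_for(hardware_profile, product_secret) == key;
}
```

A key generated for one profile is rejected on a machine with a different profile, but a copied virtual machine image reports exactly the same profile as the original, so the same key is accepted on every copy.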

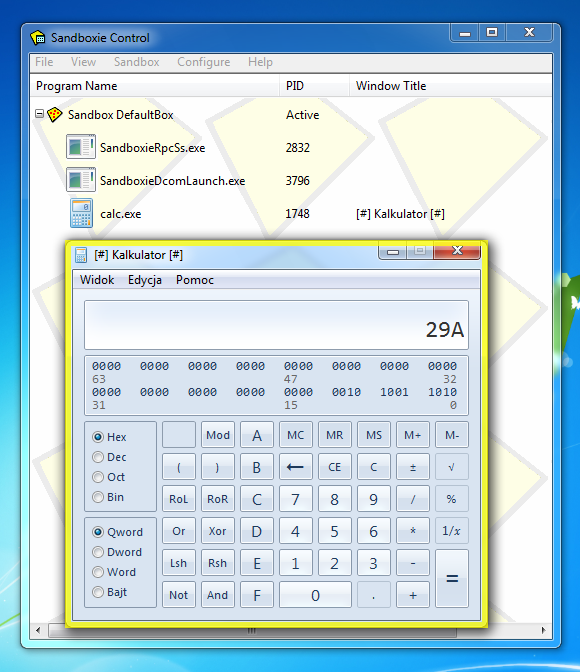

Sandboxes

A sandbox, in short, is a safe environment, isolated from the outside world, within which you can run malicious programs and monitor their activity. Sandboxes can run as systems separate from the host. The most well-known are:

- Cuckoo Sandbox

- Anubis Sandbox

- Norman Sandbox

- Joe Sandbox

- VIPRE ThreatAnalyzer

- Buster Sandbox Analyzer

They are virtual environments which allow any software to be run, and thanks to built-in monitoring tools, they provide detailed logs of all changes the software made to the system after being launched. Generally they are based on an emulated Windows environment, and possess characteristics which allow them to be detected easily. On underground forums one can find plenty of examples of the detection of such environments, e.g.:

Listing 5. Detecting execution within a Norman Sandbox environment.

; load the address of the function DecodePointer(), which

; in a Norman Sandbox environment contains

; a different list of instructions

; to a real Windows system

; DecodePointer:

;.7C80644E: C8 00 00 00 enter 0,0

;.7C806452: 8B 45 08 mov eax,[ebp][8]

;.7C806455: 0F C8 bswap eax

;.7C806457: C9 leave

;.7C806458: C2 04 00 retn 4

; load the address of DecodePointer

mov eax,DecodePointer

test eax,eax

je _not_detected

; verify the byte signature

cmp dword ptr[eax],000000C8h

jne _not_detected

cmp dword ptr[eax+4],0F08458Bh

jne _not_detected

cmp dword ptr[eax+8],04C2C9C8h

jne _not_detected

; the presence of the Norman Sandbox environment has been detected

; terminate the application

push 0

call ExitProcess

; continue the application

_not_detected:

The majority of the sandboxes listed used to be free of charge; however, several have since been commercialised and only a few remain free, among them Anubis Sandbox.

Sandboxes do not need to emulate complete systems. Sandboxes integrated into antivirus software, or application-specific sandboxes like for instance Sandboxie are becoming more popular. They allow safe execution of any application within an operating environment which protects sensitive system components from modification.

Sandboxie does not write modifications to disk, nor to any system files, just to external files which can be analysed afterwards. The operation of such systems relies on global system hooks (overriding low-level operating system functions), as well as the use of extra drivers which control the behaviour of the application. As you might guess, these mechanisms and components are exploited by creators of malware to detect tools like Sandboxie.

Listing 6. Detection of execution in a Sandboxie environment.

BOOL IsSandboxie()

{

// check if the Sandboxie helper library

// is loaded in our process

if (GetModuleHandle("SbieDll.dll") != NULL)

{

return TRUE;

}

return FALSE;

}

Hampering code analysis

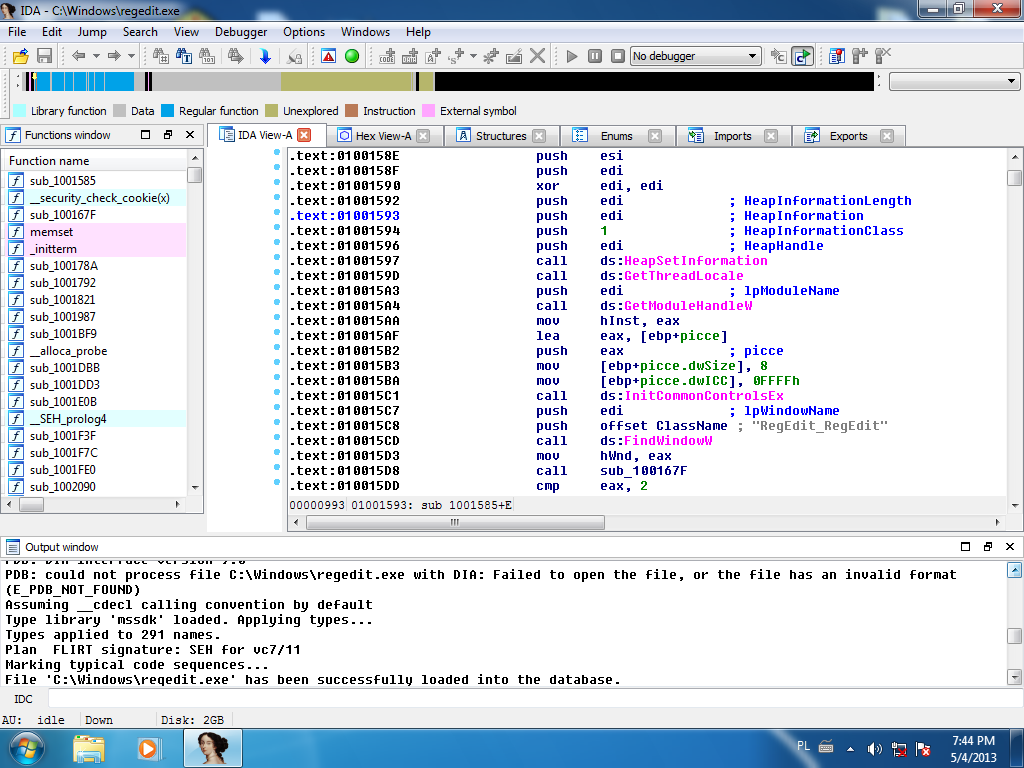

Static analysis of code is performed using tools like the IDA disassembler and the HexRays decompiler (which are the de facto standard within antivirus companies) to analyse suspected files. These tools allow the analysis of compiled applications at the assembly level, and, if possible, at the level of a high-level language.

In order to perform static analysis with these tools, it is necessary to have access to unencrypted executable files. Malware authors can use tools which make this analysis difficult or impossible, unless extra work is done.

Crypters

Crypters are the most basic tool used to encrypt entire executable files. They are created with one purpose – to prevent a malware file from being detected by antivirus programs. They are routinely traded on underground forums for tens of dollars and each client receives a unique copy, in order to avoid being detected by the existing signature database of antivirus programs.

The basis of a crypter's operation is as follows:

- Encrypting the entire executable file

- Attaching it to a loader program, either as an embedded resource, or at the end of the file (a so-called overlay)

After launching a file which has been prepared in this way, one of two things can happen, depending on how much effort the author put in. In the simple case, the file is decrypted, unpacked into a temporary directory, and launched from there. In the advanced case, the code and data sections of the loader are unmapped from memory and replaced with the sections of the decrypted executable file, after which the code is launched.
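Why does encrypting a file defeat signature scanning? The toy example below, written in portable C, shows the idea. It is a sketch only: a repeating XOR key stands in for whatever cipher a real crypter uses, and the function names and the marker string are invented for the illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* XOR every byte with a repeating key.
   Applying the function twice with the same key restores the input. */
void xor_buffer(unsigned char *buf, size_t len,
                const unsigned char *key, size_t key_len)
{
    assert(key_len > 0);

    for (size_t i = 0; i < len; i++)
        buf[i] ^= key[i % key_len];
}

/* Naive signature scan: returns 1 if sig occurs anywhere in buf. */
int contains_signature(const unsigned char *buf, size_t len,
                       const unsigned char *sig, size_t sig_len)
{
    if (sig_len == 0 || sig_len > len)
        return 0;

    for (size_t i = 0; i + sig_len <= len; i++) {
        if (memcmp(buf + i, sig, sig_len) == 0)
            return 1;
    }
    return 0;
}
```

A scanner that looks for a byte signature finds it in the original buffer, fails to find it after `xor_buffer()` has been applied, and finds it again once the loader has decrypted the data in memory. This is why antivirus engines have to emulate or unpack such files before scanning them.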

Exe-Packers

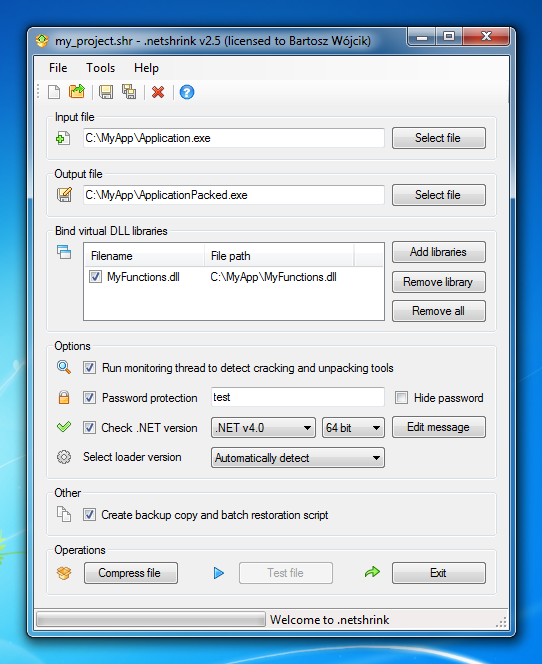

Exe-packers such as UPX, FSG, MEW, ASpack, and .netshrink have long been used to reduce the file size of executable programs. They work by pulling apart the structures of an executable file, running them through a compression algorithm, and wrapping them in a loader program. The loader program is typically small and written in assembly language. When such a packed file is launched, the loader takes control, decompresses the code and data, restores the structure of the executable file (for instance, loading functions from an import table), and then starts the original application code from its original entry point.

This kind of compression does not really pose a problem for analysis of the code. The only thing it achieves is changing potential signatures in antivirus programs, which currently are capable of automatically decompressing the most popular exe-packers (whether by the use of dedicated decompression modules, or through the use of emulation) and analysing the original file.
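The pack and unpack cycle can be sketched with a toy compression scheme. The code below uses run-length encoding purely as a stand-in for the far better algorithms found in real exe-packers. The function names are my own, and a return value of 0 simply means that the output buffer was too small (or that the input was empty).

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Packer side: encode the input as (count, value) pairs.
   Returns the number of bytes written to out. */
size_t rle_pack(const unsigned char *in, size_t in_len,
                unsigned char *out, size_t out_cap)
{
    size_t o = 0;

    assert(in != NULL && out != NULL);

    for (size_t i = 0; i < in_len; ) {
        unsigned char value = in[i];
        size_t run = 1;

        /* count identical bytes, at most 255 per pair */
        while (i + run < in_len && in[i + run] == value && run < 255)
            run++;

        if (o + 2 > out_cap)
            return 0;

        out[o++] = (unsigned char)run;
        out[o++] = value;
        i += run;
    }
    return o;
}

/* Loader side: expand the (count, value) pairs back into the original bytes.
   Returns the number of bytes written to out. */
size_t rle_unpack(const unsigned char *in, size_t in_len,
                  unsigned char *out, size_t out_cap)
{
    size_t o = 0;

    assert(in != NULL && out != NULL);

    for (size_t i = 0; i + 1 < in_len; i += 2) {
        size_t run = in[i];

        if (o + run > out_cap)
            return 0;

        memset(out + o, in[i + 1], run);
        o += run;
    }
    return o;
}
```

The packed bytes look nothing like the original, so a signature taken from the original file no longer matches, yet the loader side restores the data exactly. An antivirus engine with a matching unpacking routine can do the same.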

Scramblers

Although exe-packers only serve to reduce the size of executable files, their popularity led some users to want both compression and an original executable that is harder to recover. Thus scramblers were born. They operate by “scrambling” the already-compressed file. For example, they might change section names and add new entry code, which only then launches the decompressor. Examples of this include UPX-SCRAMBLER and UPolyX, for the popular compressor UPX.

Exe-protectors

Exe-protectors like PELock, ASProtect, ExeCryptor and Armadillo are the next stage in the evolution of exe-packers. These not only compress the executable, but also add code to detect the presence of debugging tools, and corrupt or hide the true structure of the executable, in order to make it yet more difficult to recover the contents of the original executable. A crucial part of exe-protectors is a built-in licence key system based on strong cryptographic algorithms, like ECC (Elliptic Curve Cryptography) or RSA encryption.

A popular protective technique used by exe-protectors is the encryption of specific code regions, which are placed between special markers. These regions are encrypted when the application is passed through the exe-protector. When the application is launched, the code between the markers cannot be decrypted without the correct licence key; even then the code remains encrypted until the moment it needs to run, at which point it is temporarily decrypted, and returns to its encrypted form once it has finished running.

Listing 7. Example of the use of encryption markers in the PELock exe-protector.

#include <windows.h>

#include <stdio.h>

#include <conio.h>

#include "pelock.h"

int main(int argc, char *argv[])

{

// code between the markers DEMO_START and DEMO_END

// will be encrypted in the protected file

// and will not be accessible (or executed) without

// the correct licence key

DEMO_START

printf("Welcome to the full version of my application!");

DEMO_END

printf("\n\nPress any key to continue...");

getch();

return 0;

}

In time the level of sophistication of exe-protectors led to the analysis of protected files becoming a real problem for crackers (i.e. people who try to break into software or computer systems). Although this was good for software authors in general, it made life difficult for virus analysts, since it allowed malware authors to use highly advanced encryption techniques to make analysis of malicious programs very difficult. Frequently in such systems you will find polymorphic encryption algorithms (unique encryption algorithms generated anew each time a file is protected), code mutations (where a series of assembly instructions in the file is replaced with a sequence of complicated but equivalent instructions), code virtualisation (native code is replaced by bytecode), along with a whole collection of new anti-debug tricks, rendering it very difficult to analyse the code and learn how it works.
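Code mutation is easiest to understand with an example. Real protectors operate on assembly instructions, but the principle can be shown in C. The sketch below (the function names are mine) replaces a simple addition and subtraction with longer expressions that always give the same result.

```c
#include <assert.h>
#include <stdint.h>

/* The original, readable operation. */
uint32_t add_plain(uint32_t x, uint32_t y)
{
    return x + y;
}

/* Mutated form: XOR gives the sum without carries,
   AND shifted left by one gives the carries. */
uint32_t add_mutated(uint32_t x, uint32_t y)
{
    return (x ^ y) + ((x & y) << 1);
}

/* Mutated subtraction: in two's complement, x - y == x + ~y + 1. */
uint32_t sub_mutated(uint32_t x, uint32_t y)
{
    return x + ~y + 1u;
}

/* Sanity check that the mutated forms agree with the plain operations. */
void verify_mutations(uint32_t x, uint32_t y)
{
    assert(add_mutated(x, y) == add_plain(x, y));
    assert(sub_mutated(x, y) == x - y);
}
```

Applied to every instruction of a function, and with a different replacement chosen at random each time, substitutions like these yield code that behaves identically but looks different in every protected file.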

The protections implemented in exe-protectors include:

- redirection of import tables – obscuring the true addresses of functions which are used by the application – this makes it difficult to reconstruct the import table of a protected file

- relocating the image of the file into a random region of memory – providing protection against obtaining a dump of the decrypted executable from memory

- detection of debugging and system monitoring tools

- active monitoring of application code with the aim of discovering changes made to the code by, e.g., trainers (in games)

- detection of operation within a virtual environment or sandbox

- detection of emulators

- hiding the structure of, e.g. resources, by emulating functions that provide access to these things

- combining many application files and libraries into a single executable file and emulating their presence using hooks for accessor functions

Virtualizers

Virtualizers like CodeVirtualizer and VMProtect are the successors to exe-protectors. These tools allow an application's native code (or fragments of the code, delimited by special markers) to be replaced with bytecode. Files which have been protected in this way are equipped with virtual machines to execute this bytecode.

It is very difficult to analyse applications wherein part of the code has been replaced by bytecode. This is because they cannot be analysed by standard tools like IDA and OllyDbg, seeing as these tools do not understand the format of the intermediate code. Advanced virtualizers are able to generate a unique bytecode language every time they protect an executable, further complicating the analysis of protected applications.

In these situations, special modules must be written to translate the intermediate code into e.g. x86 code. It's a painstaking and time-consuming task – malware protected in this way is exceptionally difficult to analyse, seeing as all the other protection methods present in exe-protectors can be employed as well.
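To show what “replacing native code with bytecode” means in practice, here is a deliberately tiny stack-based virtual machine in C. It is my own sketch, not the design of any real virtualizer: it has four instructions, and the `opcode_map` parameter (an assumption of this example) plays the role of the per-file unique bytecode language, since the same byte can mean a different instruction in each protected file. A return value of -1 signals malformed bytecode.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum { OP_HALT, OP_PUSH, OP_ADD, OP_MUL, OP_COUNT };

/* Executes the bytecode and returns the value on top of the stack
   when OP_HALT is reached. opcode_map[b] tells the interpreter which
   instruction the raw byte b stands for in this particular build. */
int32_t vm_run(const uint8_t *code, size_t len,
               const uint8_t opcode_map[OP_COUNT])
{
    int32_t stack[16];
    size_t sp = 0;
    size_t pc = 0;

    assert(code != NULL && opcode_map != NULL);

    while (pc < len) {
        uint8_t raw = code[pc++];

        if (raw >= OP_COUNT)
            return -1;

        switch (opcode_map[raw]) {
        case OP_PUSH:               /* push the next byte as a constant */
            if (pc >= len || sp >= 16)
                return -1;
            stack[sp++] = code[pc++];
            break;
        case OP_ADD:                /* pop two values, push their sum */
            if (sp < 2)
                return -1;
            sp--;
            stack[sp - 1] += stack[sp];
            break;
        case OP_MUL:                /* pop two values, push their product */
            if (sp < 2)
                return -1;
            sp--;
            stack[sp - 1] *= stack[sp];
            break;
        case OP_HALT:
            return sp > 0 ? stack[sp - 1] : -1;
        default:
            return -1;
        }
    }
    return -1;                      /* ran off the end without OP_HALT */
}
```

The expression (2 + 3) * 4 can be encoded for two different opcode maps, and both encodings produce the same result even though the byte sequences have almost nothing in common. A disassembler sees neither the addition nor the multiplication, only the interpreter loop, which is what makes the analysis so painful.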

Obfuscators

Obfuscators (from obfuscate – to make obscure) are tools whose purpose is to render the analysis of either compiled applications, or source code, as difficult as possible.

Obfuscators are mostly used to protect applications which are compiled to bytecode, like Java and .NET applications. There are also examples like the Pythia obfuscator for Delphi applications, however the majority of these tools are used for .NET applications. Originally the .NET platform came forth from the ashes of the Visual Basic language. Programs created in Visual Basic up to version 6 could be compiled both to native code (x86) and to a bytecode language which required additional libraries and a virtual machine runtime. The specification of the Visual Basic virtual machine was not published and analysis of these applications was challenging. However, through trial and error, some unofficial tools were created which allowed the decompilation of such applications.

With the appearance of C# and VB.NET on the market, Microsoft published the specification of the virtual machine. It turned out that decompiling the bytecode of such applications was fairly easy! Well-known tools like .NET Reflector arose, which allowed the source code of proprietary applications to be recovered in one click. This was a problem for software authors, and it wasn't long before dozens of tools were on the market allowing the obfuscation of the bytecode (intermediate language) comprising .NET applications. These obfuscators employed techniques such as:

- Modifying the execution order of sequences of instructions (from a linear format to a nonlinear arrangement involving many unconditional jumps)

- Dynamic encryption of .NET code

- Encrypting the resources of .NET applications

- Encrypting string values

- An extra layer of virtualisation of the compiled code

- Intentional corruption of the file structures of .NET applications

There is now a plethora of source-protection applications, which mostly use similar protection mechanisms. It is said that every action produces a reaction; the glut of obfuscation tools led to the appearance of many purpose-built de-obfuscators, culminating in the appearance of de4dot in 2011, which is a universal unpacker for protected .NET applications. It is capable of de-obfuscating the output of over 20 of the most popular obfuscators, including SmartAssembly, .NET Reactor, Dotfuscator, Eazfuscator, and many others. This is a real slap in the face for the software security industry. de4dot is actively developed and extended to support new source protectors.

Interestingly, the prices of obfuscators are significantly higher than those of software which protects native applications, which is strange in that the .NET platform is publicly documented, and the level of sophistication of (and expertise required for) native exe-protectors and virtualizers significantly exceeds the level of protection employed in obfuscators. The best example is the previously mentioned de4dot, which in one click can reverse the majority of protections which are applied to .NET applications. No similar universal tools exist for protected native applications.

Conflicts of interest

The situation surrounding application protection has become so serious, both for creators of protection systems and for antivirus companies, that legitimate, legal copies of protected programs are often classified as viruses by antivirus software (known as “false positives”). The authors of protection systems lose potential customers (or rather, their existing customers lose their customers), while antivirus companies struggle with the analysis of actually malicious programs which are protected by the same protection systems. The IEEE Standards Association is attempting to standardise the exchange of information between the creators of protection software and antivirus companies. The system is called TAGGANT and will require every file protected with a commercial protection system to be tagged with a signature of the individual or business which purchased the protection package. Files tagged in this way would no longer be flagged as false positives. If a legitimate protection package is somehow used to protect a malicious program (e.g. if a malware author purchases a protection package using stolen credit card details), the client's signature will be placed on a publicly-available blacklist, and every file signed with it from that point on will be flagged as potentially malicious.

Debugger detection

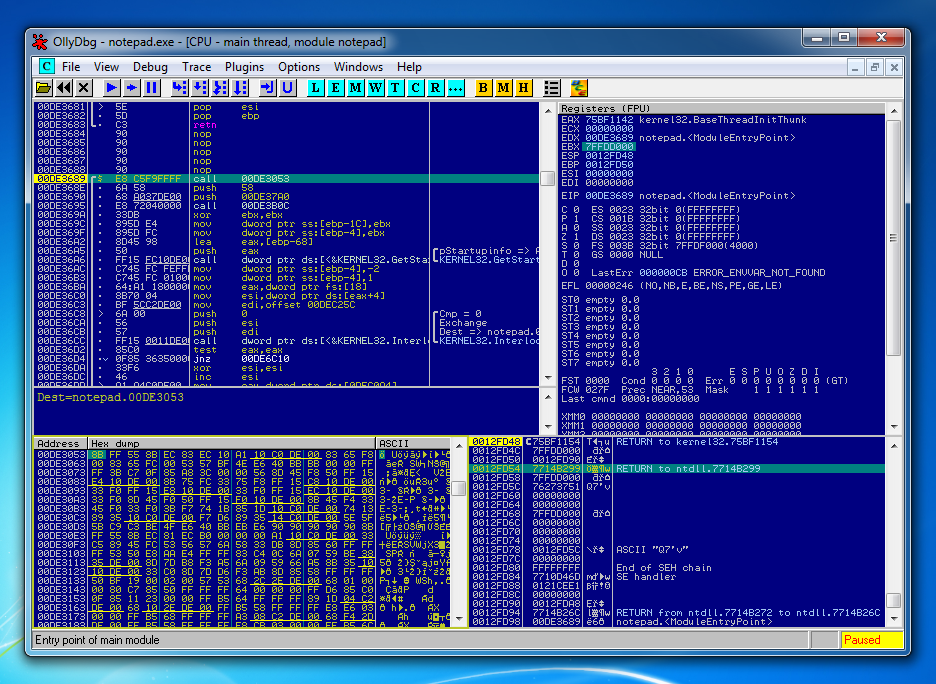

If a malicious program is more complicated and static analysis of the code in a tool like IDA (a disassembler) or HexRays (a decompiler) does not allow an analyst to determine exactly what the malicious program does, the next tool an analyst can use is a debugger – a tool which allows the execution of a program to be traced step by step.

Among the most popular debugging tools are: the debugger built into the commercial IDA disassembler (for 32 and 64-bit code), the free WinDbg, and the free, and to my knowledge most popular debugger, OllyDbg, which allows tracing of the application in user mode (the one drawback of OllyDbg is that it is limited to 32-bit applications).

The popularity of the OllyDbg debugger led to a range of modifications and extensions which hide its presence from anti-debug functionality, and make the analysis of all sorts of applications much easier, for instance by introducing a scripting language which can automate all the functions the debugger is capable of. This makes it easy to, e.g., automatically unpack all sorts of known protection methods.

On the other hand, there are plenty of methods which can be used to detect this debugger, and the creators of malicious software are all too happy to use them, since their code will be harder to analyse.

Listing 8. Detection of OllyDbg (author Walied Assar).

int __cdecl Hhandler(EXCEPTION_RECORD* pRec,void*,unsigned char* pContext,void*)

{

if (pRec->ExceptionCode==EXCEPTION_BREAKPOINT)

{

(*(unsigned long*)(pContext+0xB8))++;

MessageBox(0,"Expected","waliedassar",0);

ExitProcess(0);

}

return ExceptionContinueSearch;

}

void main()

{

__asm

{

push offset Hhandler

push dword ptr fs:[0]

mov dword ptr fs:[0],esp

}

RaiseException(EXCEPTION_BREAKPOINT,0,1,0);

__asm

{

pop dword ptr fs:[0]

pop eax

}

MessageBox(0,"OllyDbg Detected","waliedassar",0);

}

Years ago, there was a famous debugger called SoftICE. It was a system debugger (i.e. it allowed both user-mode applications and system drivers to be traced). It has been replaced by Microsoft's WinDbg, which has been developed and updated for many years. System debuggers (or kernel-mode debuggers) are employed during analysis of applications that make use of system drivers. One example of this is rootkits, which are components of malicious software whose main purpose is to bury their payload in the operating system (usually starting out from user mode), for instance by hiding the malware's processes, files, or network traffic.

Frequent updates

Malicious software can be detected using many methods, ranging from checksums of entire files, to advanced signatures made from file fragments, characteristic strings, the order of function calls, the use of uncommon functions, behavioural analysis of the software, etc. A combined assessment of these traits can result in an antivirus program classifying the file as malicious (or not).
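A signature made from a file fragment usually contains wildcards, so that bytes which vary between samples (addresses, offsets) do not break the match. Below is a minimal sketch of such a scanner in C. The `SIG_ANY` marker and the function name are my own inventions, and real engines use much faster search algorithms.

```c
#include <assert.h>
#include <stddef.h>

#define SIG_ANY (-1)    /* wildcard: matches any byte */

/* Searches buf for a signature given as an array of byte values (0..255)
   and SIG_ANY wildcards. Returns the offset of the first match, or -1. */
long find_signature(const unsigned char *buf, size_t len,
                    const int *sig, size_t sig_len)
{
    assert(buf != NULL && sig != NULL);

    if (sig_len == 0 || sig_len > len)
        return -1;

    for (size_t i = 0; i + sig_len <= len; i++) {
        size_t k = 0;

        while (k < sig_len && (sig[k] == SIG_ANY || sig[k] == buf[i + k]))
            k++;

        if (k == sig_len)
            return (long)i;
    }
    return -1;
}
```

For example, the signature `C8 00 00 00 8B 45 ?? 0F C8` matches the start of the `DecodePointer()` stub shown in Listing 5 regardless of the displacement byte used in the `mov` instruction.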

Such decisions assume the malware will exhibit similar traits every time. Modifying some of these traits will mean that a malicious program may evade detection. In what ways can such changes be made, without the effort of modifying the malware's source code?

- Change of compiler – the simplest trick. Compilers for C/C++, Delphi and Visual Basic are plentiful and supplying the source code to a different compiler is usually not difficult (unless the program makes heavy use of features specific to a particular compiler)

- Changes to compile options, such as turning optimisations on or off, or changing the default calling convention, instantly change the structure of the output file

- Frequent updates, in conjunction with changes in compile options, lead to resulting files which are quite different at the binary level, even though they exhibit the same functionality.

Function substitution

“All roads lead to Rome,” so the saying goes. When it comes to malware, there are many ways to reach the same outcome. For instance, to obtain access to a file, many Windows system functions and additional library functions can be used:

| Library | Function name | Description |

|---|---|---|

| KERNEL32.dll | CreateFileA | ANSI version of function to open a file |

| KERNEL32.dll | CreateFileW | UNICODE version |

| KERNEL32.dll | CreateFileTransactedA | Transactional version |

| KERNEL32.dll | CreateFileTransactedW | UNICODE version |

| MSVCR100.dll | fopen | Standard function from the CRT library |

| MSVCR100.dll | fopen_s | Safe version of fopen |

… and there are dozens of other libraries which provide similar functions that can be used as substitutes.

Listing 9. Example showing several methods to read a file.

#include <windows.h>

#include <stdio.h>

// mode used to open a file

#define FILE_MODE 3

int main()

{

#if FILE_MODE == 1

HANDLE hFile = CreateFileA("notepad.exe", GENERIC_READ | GENERIC_WRITE, 0, NULL, OPEN_EXISTING, 0, NULL);

#elif FILE_MODE == 2

HANDLE hFile = CreateFileW(L"notepad.exe", GENERIC_READ | GENERIC_WRITE, 0, NULL, OPEN_EXISTING, 0, NULL);

#elif FILE_MODE == 3

FILE *hFile = fopen("notepad.exe", "rb+");

#else

//...

#endif

// close file handle

#if FILE_MODE < 3

CloseHandle(hFile);

#else

fclose(hFile);

#endif

return 0;

}

The use of a particular group of functions may be a “red flag” during analysis; however, modifying a program in this way creates a whole new code structure, which can help the malware evade detection.

Scripts instead of programs

Instead of directly calling system functions, certain tasks can be performed by scripts written in languages like VBScript or JScript. Malicious software also sometimes contains interpreters for scripting languages like Lua (as used by the Flame virus), Python (for instance IronPython) or even PHP (the PH7 engine). Once the source code is isolated, it is often easier to analyse than compiled code, but the sheer number of different languages that malware authors can choose creates a headache for virus analysts and antivirus companies.

Modular design

Malicious software isn't always installed all at once. Often the infectious module is quite small in size, and after infection the module connects to a control server and downloads additional modules. This design also makes it easy to add new features to the software or repair problems within it. For an analyst, things are much easier when the malware is all contained in one file. When malware is split up into many different libraries, analysis is slowed down, especially if different modules are written using different programming languages and each is protected with a different system.

Niche languages

Malicious software tends to be written in the most popular languages, namely C/C++, Delphi, Visual Basic, and C#. In my opinion this is a result of two things: first, the ease with which such software can be run on many versions of Windows (without the need to install additional system components), and second, the wide availability of tutorials, discussion groups, and source code examples pertaining to these languages, making it relatively easy for even novice developers to create malicious programs.

Certain malware creators have gone a step further, though, realising that using popular languages makes their software easy to analyse, because of all the tools which exist for these languages. Virus analysts deal with popular languages on a daily basis, so they are equipped with all the relevant tools, and have no problem with splitting such programs into their basic parts.

On the other hand, programs written in niche programming languages, for instance functional languages or business-oriented languages, are more challenging for analysts. Here are some examples:

- Haskell

- Lisp

- Visual FoxPro

- Fortran

- COBOL

- Exotic BASIC compilers (e.g. DarkBasic, PureBasic etc.)

Such programs often have a rather complicated structure; in many cases their entire code is in the form of an intermediate language (or bytecode), or requires many megabytes of additional libraries which are tough to analyse, not to mention time-consuming. There are a few dedicated tools to decompile programs written in some of these languages, e.g. Visual FoxPro applications can be decompiled using ReFox, but such tools are reasonably rare in this space because there is simply not much demand.

The Duqu trojan, a relative of the famous Stuxnet worm, was created partially using an obscure object-oriented framework for the C language, and analysts had a tough time figuring out what language it was written in, given the unusual structure of the code.

At the end of this article you can find a reference to an illustrative program called CrackMe – created specially to be reverse-engineered. It is written in the Haskell language, and isn't particularly easy to analyse, despite being a simple algorithm – a few lines of code which check the validity of a licence key.

Automation software

There are software packages on the market that allow people with limited technical skills to create compiled programs with full access to system files, loading components from the network and accessing the Windows Registry. I'm referring to programs like:

Applications created with these tools are less likely to be detected, because their code is generally in the form of an intermediate language and special decompilers are needed to analyse their behaviour.

Source modification

Source modification is one of the most advanced methods of creating unique output files. So, what sort of changes can be made to source files which produce different output files?

- Mutation of source code, e.g. by the use of templates

- Reordering functions in source files

- Varying the optimisation options of individual functions

- Introducing dummy parameters to functions and faking their usage (compiler optimisations can remove unused parameters)

- Introducing spurious constructions between lines of code (e.g. junk instructions which perform unnecessary tasks, instructions which jump around between each other, unnecessary verification of function parameters, and unnecessary references to local variables)

- Nonlinear code execution (e.g. by using switch statements)

- Changes to structure definitions, i.e. random rearrangement of the members of data structures or the introduction of dummy fields

All these modifications can produce major changes in the compiled output file.
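As a rough illustration of the "nonlinear code execution" item above, the sketch below shows the same small function written twice: once as an ordinary loop, and once flattened into a switch-driven state machine. The function names and the state numbers are made up for this example, and real source mutators are considerably more elaborate; the point is only that both versions compute the same result while compiling to very different code.

```c
#include <stdint.h>

/* Straightforward version: sum of the integers 1..n. */
uint32_t sum_plain(uint32_t n)
{
    uint32_t total = 0;
    for (uint32_t i = 1; i <= n; i++)
        total += i;
    return total;
}

/* Flattened version: the loop is cut into numbered states that are
   dispatched by a switch. The state numbers are arbitrary, so the
   order of the cases says nothing about the order of execution. */
uint32_t sum_flattened(uint32_t n)
{
    uint32_t total = 0;
    uint32_t i = 0;
    int state = 3;

    for (;;) {
        switch (state) {
        case 3: /* loop initialisation */
            i = 1;
            state = 7;
            break;
        case 7: /* loop condition */
            state = (i <= n) ? 1 : 5;
            break;
        case 1: /* loop body */
            total += i;
            state = 4;
            break;
        case 4: /* loop increment */
            i++;
            state = 7;
            break;
        case 5: /* loop exit */
            return total;
        default: /* never reached */
            return 0;
        }
    }
}
```

Calling sum_flattened(10) gives the same value as sum_plain(10). A mutation tool could renumber the states and reorder the cases on every build, producing a different binary each time from identical logic.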

Listing 10. Junk instructions in Delphi code.

procedure TForm1.FormCreate(Sender: TObject);

begin

// junk instructions (these instructions

// do not have any impact on the behaviour

// of the application)

asm

db 0EBh,02h,0Fh,078h

db 0EBh,02h,0CDh,20h

db 0EBh,02h,0Bh,059h

db 0EBh,02h,038h,045h

db 0E8h,01h,00h,00h,00h,0BAh,8Dh,64h,24h,004h

db 07Eh,03h,07Fh,01h,0EEh

db 0E8h,01h,00h,00h,00h,03Ah,8Dh,64h,24h,004h

db 0EBh,02h,03Eh,0B8h

end;

// This line will be unreadable by

// a disassembler, thanks to

// the junk instructions

Form1.Caption := 'Hello world';

asm

db 070h,03h,071h,01h,0Ch

db 0EBh,02h,0Fh,037h

db 072h,03h,073h,01h,080h

db 0EBh,02h,0CDh,20h

db 0EBh,02h,0Fh,0BBh

db 078h,03h,079h,01h,0B7h

db 0EBh,02h,094h,05Ch

db 0C1h,0F0h,00h

end;

end;

One of the most advanced examples of source code modification that I have seen is the protection employed by Syncrosoft in the MCFACT system which is used to protect the well-known audio suite Cubase made by the company Steinberg. The source code is first analysed and then transformed into protected form.

Listing 11. Mutation of C++ code (before and after) by the MCFACT system.

//MCFACT_PROTECTED

unsigned int findInverse(unsigned int n)

{

unsigned int test = 1;

unsigned int result = 0;

unsigned int mask = 1;

while (test != 0)

{

if (mask & test)

{

result |= mask;

//MCFACT_AUTHORIZED

test -= n;

}

mask <<= 1;

n <<= 1;

}

return result;

}

// code after protection

unsigned int findInverse(unsigned int n)

{

...

class _calculations_cpp_test_0 test=((_init0_0::_init0)());

class _calculations_cpp_result_0 result=((_init1_0::_init1)());

class _calculations_cpp_mask_0 mask=((_init2_0::_init2)());

_cycle1:{

signed char tmp8;

((tmp8)=((IsNotEqual)((test), (_calculations_cpp_c_0_0))));

if ((tmp8)) {

{

unsigned int tmp7;

((And)((mask), (test), (tmp7)));

if ((tmp7)) {

((Or)((result), (mask), (result)));

((Sub)((test), (n), (test)));

}

((ShiftLeftOne)((mask), (mask)));

((n)<<=(1U));

}

goto _cycle1;

}

}

{

unsigned int tmp9;

((Copy)((result), (tmp9)));

return(tmp9);

}

}

Analysing such code poses quite a challenge. The protection was eventually broken by the warez group “Team-AiR”, yet according to what was written in the NFO (an info file distributed with cracked software), the analysis took 4000 hours – almost half a year of work! During this time, the software publisher was able to continue selling software without needing to give a second thought to piracy. A similar protection system was being developed by Cloakware (now Irdeto), but the project was dropped. I believe that if this kind of protection system were combined with advanced virtualisation techniques, the results would be catastrophic for both users and antivirus companies. Thankfully, such systems are very difficult to build and are not commonly available.

Encryption of data and strings

This is the most commonly encountered method of hiding sensitive information. Encryption is often used for string constants which contain, for example, the names of files to infect, the addresses of control servers, or even passwords to FTP servers or mailboxes to which malware sometimes sends data stolen from the computers it infects (yes, hard as it is to believe, passwords to private repositories are shipped inside the malware, albeit encrypted). Communication between malware and its control servers is also often encrypted to keep its contents secret.

The Rustock rootkit used the RC4 encryption algorithm (one of the most common found in malware) to protect its own modules from analysis. During infection, a hardware ID was taken from the computer and used as an encryption key for a device file installed by the virus. The point of this was that this file would not work on any other computer (since the hardware ID did not match) and at the same time its analysis was impossible on another computer (e.g. if the file was sent to an antivirus company for analysis). It was necessary to obtain the hardware ID of an infected computer, and only then could the viral code be decrypted.
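From the analyst's side, the consequence of such machine-bound encryption can be sketched with a plain RC4 routine. The code below is a generic, textbook RC4 implementation, not Rustock's actual code; the function name rc4_crypt is chosen for this example, and the format of the hardware ID used as the key is not specified here. Because RC4 is symmetric, the same call both encrypts and decrypts, so an analyst who has recovered the key bytes from the infected machine can decrypt the module with exactly this kind of routine.

```c
#include <stddef.h>
#include <stdint.h>

/* Generic RC4: encrypts or decrypts buf in place (the operation is
   symmetric). key_len must be between 1 and 256. */
void rc4_crypt(const uint8_t *key, size_t key_len,
               uint8_t *buf, size_t buf_len)
{
    uint8_t s[256];
    uint8_t j = 0;
    uint8_t tmp;
    size_t i;

    /* Key-scheduling algorithm: permute the state table using the key. */
    for (i = 0; i < 256; i++)
        s[i] = (uint8_t)i;
    for (i = 0; i < 256; i++) {
        j = (uint8_t)(j + s[i] + key[i % key_len]);
        tmp = s[i]; s[i] = s[j]; s[j] = tmp;
    }

    /* Keystream generation: each keystream byte is XORed onto the data. */
    uint8_t a = 0;
    uint8_t b = 0;
    for (i = 0; i < buf_len; i++) {
        a = (uint8_t)(a + 1);
        b = (uint8_t)(b + s[a]);
        tmp = s[a]; s[a] = s[b]; s[b] = tmp;
        buf[i] ^= s[(uint8_t)(s[a] + s[b])];
    }
}
```

Running the routine twice with the same key restores the original data, while a different key (for instance, the hardware ID of the analyst's own machine) yields only garbage.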

Well-known encryption algorithms (such as AES) utilise fixed tables of data, and analysis software (such as the PEiD signature scanner) can instantly detect them in executable files. This signals to an analyst that something encrypted is hiding in the code and may be worth investigating. For this reason, malware authors sometimes use dynamically generated encryption algorithms, which don't arouse suspicion, e.g.:

Listing 12. Dynamically generated decryption code obtained using the StringEncrypt service.

// encrypted with https://www.stringencrypt.com (v1.1.0) [C/C++]

// wszLabel = "C/C++ String Encryption"

wchar_t wszLabel[24] = { 0x2976, 0x2AF9, 0x289C, 0x2B9F, 0x2BA2, 0x2D05, 0x2688, 0x316B,

0x336E, 0x33D1, 0x3214, 0x3337, 0x2CDA, 0x277D, 0x3200, 0x34A3,

0x32C6, 0x3269, 0x32AC, 0x312F, 0x3392, 0x3255, 0x3258, 0x609B };

for (unsigned int Qqhvj = 0, JWqBw = 0; Qqhvj < 24; Qqhvj++)

{

JWqBw = wszLabel[Qqhvj];

JWqBw -= 0xA50D;

JWqBw += Qqhvj;

JWqBw = (((JWqBw & 0xFFFF) >> 15) | (JWqBw << 1)) & 0xFFFF;

JWqBw ^= 0xB0D1;

JWqBw ++;

JWqBw = (((JWqBw & 0xFFFF) >> 3) | (JWqBw << 13)) & 0xFFFF;

JWqBw = ~JWqBw;

JWqBw += 0xAF02;

JWqBw ^= Qqhvj;

JWqBw ^= 0x9FC1;

JWqBw -= 0xA9E0;

JWqBw = ~JWqBw;

JWqBw ++;

JWqBw = (((JWqBw & 0xFFFF) >> 3) | (JWqBw << 13)) & 0xFFFF;

JWqBw --;

wszLabel[Qqhvj] = JWqBw;

}

wprintf(wszLabel);

Time bombs

Sometimes, malware programs are designed to activate after a certain time period. For instance, they may check the local time and commence operating after a precisely defined date. In this way such software may avoid arousing suspicion (since no changes are observed in the system). Thus, if preliminary analysis suggests that the program is not entirely clean, it is wise to perform further investigation.

Listing 13. Activating a program after a particular date.

#include <windows.h>

void MrMalware(void)

{

// malicious code

MessageBox(NULL, "I'm a virus!", "Boo!", MB_ICONWARNING);

return;

}

int main()

{

SYSTEMTIME stLocalTime = { 0 };

// obtain the current time

GetLocalTime(&stLocalTime);

// activate the malicious code

// only after this date

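// (the date is packed into a single number, YYYYMMDD, so that

// e.g. 1 January 2014 also counts as later than 2 May 2013)

if (stLocalTime.wYear * 10000 +

stLocalTime.wMonth * 100 +

stLocalTime.wDay >= 20130502)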

{

MrMalware();

}

return 0;

}

What can we do when we suspect that a program may contain a time bomb (which is not at all obvious)? The most straightforward solution is to adjust the system clock forward or back and observe changes in the system using monitoring tools. Despite their simplicity, time bombs are a very effective defence against the discovery of malicious behaviour.
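A date trigger is easy to get wrong, which matters both to whoever writes it and to the analyst guessing which clock settings to try. Comparing the year, month, and day independently misses dates such as 1 January of a later year, because the month and day tests fail on their own. The sketch below, with helper names invented for this example, packs a date into a single integer so that ordinary integer comparison orders dates correctly.

```c
#include <stdbool.h>

/* Pack a calendar date into one integer so that plain integer
   comparison orders dates correctly, e.g. 2 May 2013 -> 20130502. */
static long date_key(int year, int month, int day)
{
    return (long)year * 10000L + (long)month * 100L + (long)day;
}

/* Returns true when the date (year, month, day) is on or after the
   trigger date (trig_year, trig_month, trig_day). */
bool date_reached(int year, int month, int day,
                  int trig_year, int trig_month, int trig_day)
{
    return date_key(year, month, day) >=
           date_key(trig_year, trig_month, trig_day);
}
```

With a trigger of 2 May 2013, date_reached reports false for 30 April 2013 and true for both 2 May 2013 and 1 January 2014. When probing a suspected time bomb, it is therefore worth trying several clock settings, including dates well into the future.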

Delayed execution

Aside from time bombs, certain malware programs employ time delays, for instance based on timers. The basic idea is that the actual malicious behaviour does not occur until, say, an hour after the program is launched. The point of this is twofold. First, it misleads the analyst. On launching the program, nothing happens. Conclusion? The software is clean and further analysis is unnecessary. Second, time delays can fool emulators which are used in antivirus software. Emulators step through suspected programs, trying to get as far as possible through the code, emulating new instructions whenever they are found (in the case of encrypted executables). However, emulators are subject to time restrictions, because it doesn't make sense for a user to launch a program and wait half an hour for the emulator to reach the end of the encrypted code. A good emulator will detect such time delays (e.g. the Sleep() function, delay loops, etc.), but not all possible time delays are taken into account, and the many methods of measuring time in Windows mean that such delays are a good defence against dynamic analysis of code.
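One way an emulator might cope with an explicit Sleep() call can be sketched as a toy model: instead of really waiting, it advances a virtual clock and charges the call against its step budget. Everything below (the Emulator structure, emu_sleep, emu_get_tick_count) is hypothetical and invented for this illustration; it does not describe how any particular antivirus product works.

```c
#include <stdbool.h>
#include <stdint.h>

/* Toy emulator state: a virtual clock plus a budget of emulated steps. */
typedef struct {
    uint64_t virtual_ms;     /* time as seen by the emulated program */
    uint32_t steps_left;     /* how many more steps will be emulated */
    uint32_t sleeps_skipped; /* number of delays fast-forwarded so far */
} Emulator;

/* Handler for an emulated Sleep(ms) call: costs a single step and moves
   the virtual clock forward without any real waiting. Returns false
   once the step budget is exhausted and emulation has to stop. */
bool emu_sleep(Emulator *emu, uint32_t ms)
{
    if (emu->steps_left == 0)
        return false;
    emu->steps_left--;
    emu->virtual_ms += ms;
    emu->sleeps_skipped++;
    return true;
}

/* Handler for an emulated tick-count query: reports the virtual clock,
   so the skipped delay looks genuine to the emulated program. */
uint32_t emu_get_tick_count(const Emulator *emu)
{
    return (uint32_t)emu->virtual_ms;
}
```

In this model an hour-long Sleep() costs one step rather than an hour. A delay built from a busy loop or from a less common time source is not caught by such a handler and simply burns through the step budget, which is why delays remain effective in practice.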

Listing 14. Activation of malicious code after a certain time period.

#include <windows.h>

UINT_PTR dwTimerId = 0;

const DWORD dwTimerEventId = 666;

// callback function activated after a designated time

VOID CALLBACK MrMalware(HWND hwnd, UINT uMsg, UINT_PTR idEvent, DWORD dwTime)

{

// malicious code

MessageBox(NULL, "I'm a virus!", "Boo!", MB_ICONWARNING);

return;

}

int main()

{

// activate malicious code 5 seconds

// after the program is launched

dwTimerId = SetTimer(NULL, dwTimerEventId, 5 * 1000, MrMalware);

MessageBox(NULL, "I'm a friendly program", "Hi!", MB_ICONINFORMATION);

return 0;

}

Cryptographic signatures

Cryptographic signatures are employed to sign applications. They are a sort of guarantee that the digitally signed application comes from a trusted source. Certain antivirus programs do not bother scanning applications that possess digital signatures. To obtain a digital certificate, the identities of both the individual and the business in whose name the certificate is issued must be verified. Without appropriate documents (such as bank statements, telephone bills, and scans of ID documents) it is impossible to obtain a certificate from a legitimate organisation. However, digital certificates do get stolen from time to time, for instance in a hacker attack, and are then used to sign malware. Such cases are infrequent, but they have caused embarrassment for antivirus programs which automatically gave the all-clear to signed applications.

I'll share a humorous anecdote: at one point, the antivirus software of the company Comodo flagged even digitally signed applications as potentially malicious, along with a message informing the user that, although the application was digitally signed, the signature was not issued by Comodo (which was also in the business of issuing digital signatures).

Online scanners

For obvious reasons, malware authors want to know if their malware will be detected by antivirus programs. Installing all the popular antivirus applications would be time-consuming (not to mention the fact that they aren't compatible with each other), and so malware authors make use of online services, which scan uploaded files with dozens of antivirus applications. If the VirusTotal service (recently purchased by Google) came to mind, you were just a little bit off. Solutions like VirusTotal or the online scanner Jotti aren't actually used by malware authors who know what they're doing. This is because all files submitted to these services are shared with antivirus firms for analysis. Instead, malware authors use sites like NoVirusThanks or AntiScan.Me, which pride themselves on not handing files over to antivirus companies.

Conclusion

From my experience and observations, it is evident that the battle between those who analyse software, and those who create software protection, will never be over. Funnily enough, on both sides of the battle are those with good intent – like antivirus firms, and people who create or use software protection for legitimate purposes – and those with malicious intent, like crackers who try to break software protection, and those who create or use software protection to allow malicious code to get through our defences. Looking at how tools have evolved over time, beginning with exe-packers up to the current usage of virtualizers, can we speculate as to what's coming next? My view is that everything will go in the direction of virtualized code, but even more advanced than at present. This can be seen from current trends in the market for native application protectors, and the appearance of virtualizers for .NET applications. How will antivirus companies handle this? Just the same as always, I think – with the arduous work of their clever employees.